
The real-time data platform for observability

Most monitoring tools do too much

They install an agent or poll data through an API, send it to their time-series database, and then give you dashboards and charts to look at. This may seem fine at first, but it causes more pain than it cures once you scale up to terabytes per day!

Data visibility

Latency and throughput

Pricing model

Our approach is simple: ingest OpenTelemetry data into an S3 bucket as Parquet files in Iceberg table format, and query it using DuckDB with millisecond retrieval and zero egress cost.
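As a rough illustration of what querying that layout could look like, here is a minimal Python sketch. It is not our actual implementation: the table path, the function names, and the column names (`service_name`, `severity_text`, `ts`) are assumptions made up for this example. It relies on DuckDB's `httpfs` and `iceberg` extensions, and running it against a real bucket requires the `duckdb` package and S3 credentials.

```python
from datetime import datetime, timezone


def build_error_count_query(table_uri: str, since: datetime) -> str:
    """Return DuckDB SQL that counts error logs per service since `since`.

    `table_uri` is the root of an Iceberg table (hypothetical layout).
    The column names are assumptions, not a documented schema.
    """
    if "'" in table_uri:
        raise ValueError("table_uri must not contain single quotes")
    # Normalize to UTC so the literal is unambiguous; pass a timezone-aware value.
    ts = since.astimezone(timezone.utc).strftime("%Y-%m-%d %H:%M:%S")
    return (
        "SELECT service_name, count(*) AS errors\n"
        f"FROM iceberg_scan('{table_uri}')\n"
        f"WHERE severity_text = 'ERROR' AND ts >= TIMESTAMP '{ts}'\n"
        "GROUP BY service_name\n"
        "ORDER BY errors DESC"
    )


def run_query(sql: str):
    """Execute `sql` with an in-memory DuckDB connection and return all rows."""
    import duckdb  # imported lazily so the query builder works without it

    con = duckdb.connect()
    # httpfs provides s3:// access; iceberg provides iceberg_scan().
    for stmt in ("INSTALL httpfs", "LOAD httpfs", "INSTALL iceberg", "LOAD iceberg"):
        con.execute(stmt)
    return con.execute(sql).fetchall()
```

Calling `build_error_count_query("s3://example-bucket/otel/logs", since)` only builds the SQL text, so it can be inspected without any network access. `run_query` then hands that text to DuckDB, which reads the Parquet files directly from the bucket rather than from a separate database server.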

Let's talk about why we went with certain architectural choices

"This will never work." We've heard this many times. We got a ton of advice to build this on top of ClickHouse. In fact, almost all modern-day startups in this space use ClickHouse. A couple of issues were raised about our choice of tech stack:

Scalable, fault-tolerant and efficient data lakehouse

Our approach is opinionated, offering tools and systems for monitoring and security. This means that you do not have to build another observability pipeline, provision a Kafka cluster, or manage Spark jobs. We will take care of chaining everything for you.

OpenTelemetry

The gold standard in data collection

Apache Flink

Stateful streaming analytics on cold S3

Apache Arrow

Efficient analytics on larger than memory datasets

SQLAlchemy

Publish query results as low-latency APIs

Join our community of early adopters and advocates and help us prioritize

238K

218

88

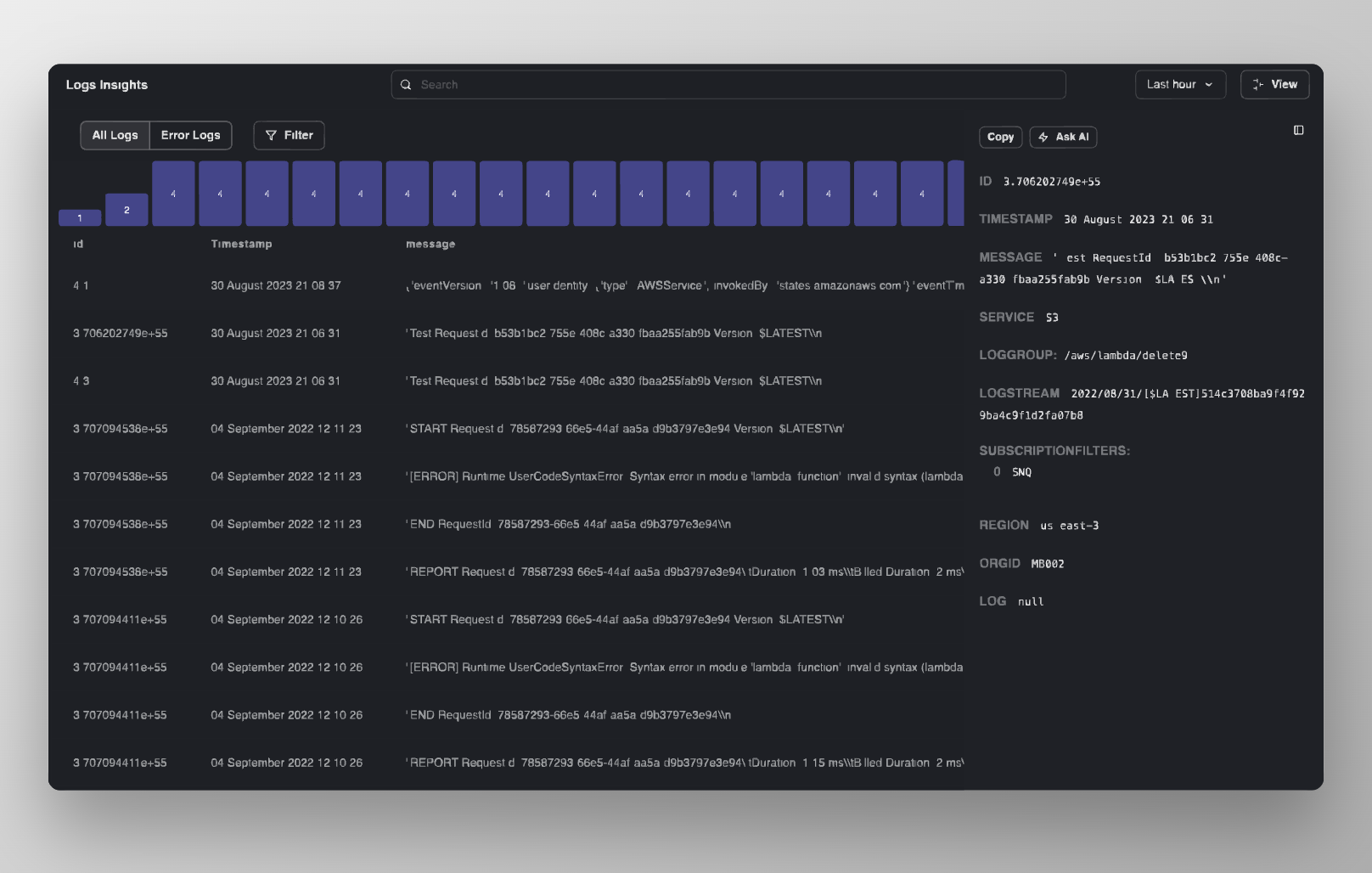

Log Management — done.

Infrastructure Monitoring — done.

Cloud SIEM — done.

Distributed Tracing — in-progress.

Email & Slack Alerts — in-progress.

Cloud CSPM — in-progress.

Continuous Profiler — planned.

Cloud Service Map — planned.

Cloud Asset Discovery — planned.

Collaborative Workspace — done.

Keyboard Shortcuts — done.

Custom Otel Agent — in-progress.

Linear & GitHub Sync — in-progress.

Real User Monitoring — planned.

Synthetic Monitoring — planned.

Ready to roll with us?

The real-time data platform for engineering teams

Our mission is to make your work easier.

We make sure you never have to slog through logs again.